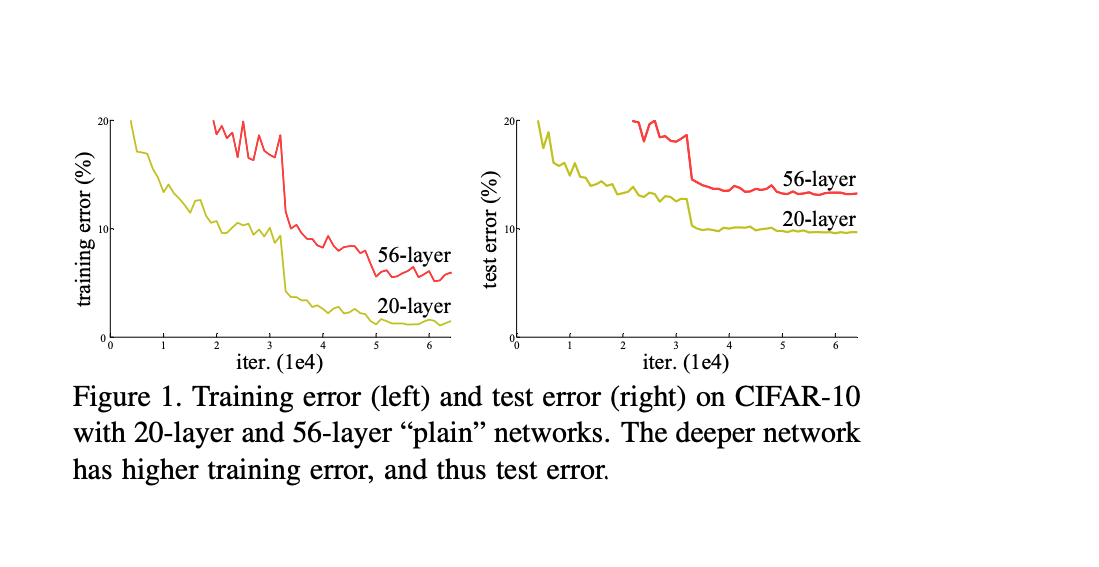

Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth. On the ImageNet dataset we evaluate residual nets with a depth of up to 152 layers, 8x deeper than VGG nets but still having lower complexity. An ensemble of these residual nets achieves 3.57% error on the ImageNet test set. This result won the 1st place on the ILSVRC 2015 classification task. We also present analysis on CIFAR-10 with 100 and 1000 layers. The depth of representations is of central importance for many visual recognition tasks. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won the 1st places on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.
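To make "learning residual functions with reference to the layer inputs" concrete, here is a minimal pure-Python sketch of a two-layer residual block next to a plain block. The function names (`residual_block`, `plain_block`, `linear`, `relu`), the omission of biases, and the toy 3-dimensional input are assumptions chosen for illustration; this is not the authors' implementation. With all weights set to zero, the residual block passes a non-negative input through unchanged, while the plain block outputs zeros.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def relu(v: Vector) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, a) for a in v]


def linear(weights: Matrix, v: Vector) -> Vector:
    """Matrix-vector product; `weights` holds one row per output unit."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers that must fit the desired mapping directly."""
    return relu(linear(w2, relu(linear(w1, x))))


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers fit a residual F(x); the shortcut adds x back."""
    f = linear(w2, relu(linear(w1, x)))             # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])  # F(x) + x, then ReLU


# Toy check with all-zero weights (illustrative values only).
zeros = [[0.0] * 3 for _ in range(3)]
x = [1.0, 2.0, 3.0]
identity_out = residual_block(x, zeros, zeros)
plain_out = plain_block(x, zeros, zeros)
print(identity_out, plain_out)
```

The sketch suggests one intuition for the optimization claim: if an identity mapping is close to optimal for some block, the residual form only has to push its weights toward zero, whereas the plain form has to learn the identity through its nonlinear layers.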

This is the classic ResNet (Residual Network) paper (He et al., 2015), which describes a method of making convolutional neural networks with a depth of up to 152 layers trainable. The residual networks described in this paper won the ILSVRC 2015 classification task and many other competitions. Artificial neural networks (ANNs) have achieved great success in many tasks, including image classification [28, 52, 55] and object detection [9, 34, 44].

Deep Residual Learning for Image Recognition. Instead of hoping each few stacked layers directly fit a desired underlying mapping, we explicitly let these layers fit a residual mapping. In image recognition, VLAD [18] is a representation that encodes by the residual vectors with respect to a dictionary, and Fisher Vector [30] can be …
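The residual mapping mentioned above can be written compactly. The symbols are notation assumed here for illustration: $x$ is the input to a few stacked layers, $\mathcal{H}(x)$ is the desired underlying mapping, and $\mathcal{F}(x)$ is the residual that the layers are asked to fit.

$$
\mathcal{F}(x) := \mathcal{H}(x) - x \quad\Longrightarrow\quad \mathcal{H}(x) = \mathcal{F}(x) + x
$$

Under this reading, the stacked layers compute $\mathcal{F}(x)$ and a shortcut connection adds $x$ back, so the block as a whole still represents $\mathcal{H}(x)$.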
